RelayPlane vs. LiteLLM: More Than Just a Model Router

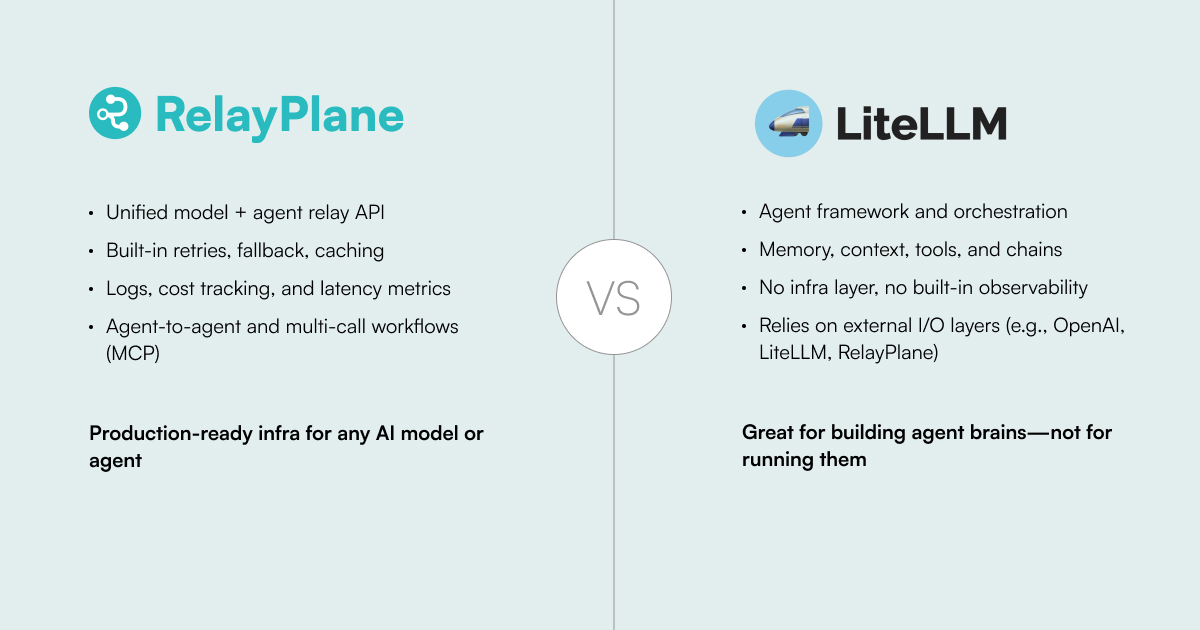

LiteLLM gives you access to multiple LLMs with a single API. RelayPlane goes further—handling retries, failover, agent-to-agent workflows, and full observability. Here's how they compare.

TL;DR: LiteLLM is great for getting started with multi-model access. RelayPlane is built for production AI workflows with agent orchestration, observability, and infrastructure management.

At a glance, RelayPlane and LiteLLM look similar: both provide a way to access multiple large language models (LLMs) like GPT-4, Claude, and Gemini via a single API. But when you dig deeper, their focus, capabilities, and use cases are very different.

This post breaks down what each tool does—and why RelayPlane is built for production-level AI infrastructure.

LiteLLM: A Helpful Developer Shortcut

LiteLLM is great for getting started. It lets you call multiple model APIs with a unified interface and supports key features like:

- Simple SDK to access 100+ models

- Optional observability via plugins

- Local or hosted deployment options

RelayPlane: An AI Control Plane

RelayPlane is more than an API wrapper. It's a control layer that handles everything your backend would otherwise need to orchestrate across vendors and agents:

✅ Production-Ready Routing

- Automatic failover and retry logic

- Caching for reduced latency and cost

- Chain-of-call support (MCP): pass data between models and agents
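Here is a minimal, provider-agnostic sketch of what retry, failover, and caching look like in practice. It assumes a hypothetical `Provider` interface (a name plus an async `call`) and a hypothetical `routeWithFailover` helper. This is not RelayPlane's implementation or API. Backoff between attempts is left out for brevity. The cache is keyed on the prompt alone, whereas a real cache would also key on model and parameters.

```typescript
// Hypothetical provider shape: a name and an async call that returns text.
type Provider = {
  name: string;
  call: (prompt: string) => Promise<string>;
};

type RouteResult = { provider: string; text: string; cached: boolean };

// Naive in-memory cache keyed by prompt only (illustrative, no eviction or TTL).
const cache = new Map<string, RouteResult>();

async function routeWithFailover(
  prompt: string,
  providers: Provider[],
  maxRetries = 2,
): Promise<RouteResult> {
  // Serve repeated prompts from cache to save latency and cost.
  const hit = cache.get(prompt);
  if (hit) return { ...hit, cached: true };

  const errors: string[] = [];
  // Try providers in priority order (failover).
  for (const p of providers) {
    // Each provider gets one initial attempt plus `maxRetries` retries.
    for (let attempt = 0; attempt <= maxRetries; attempt++) {
      try {
        const text = await p.call(prompt);
        const result = { provider: p.name, text, cached: false };
        cache.set(prompt, result);
        return result;
      } catch (err) {
        // Record the failure and retry; a real router would back off here.
        errors.push(`${p.name}#${attempt}: ${String(err)}`);
      }
    }
  }
  throw new Error(`All providers failed:\n${errors.join("\n")}`);
}
```

Retrying the primary a few times before moving on keeps transient errors from triggering failover too eagerly. The trade-off is extra latency when a vendor is fully down.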

✅ Full Observability

- Built-in logging: input/output, latency, errors

- Cost tracking per call, model, and agent

- Usage dashboards, alerts, and rate limiting
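Here is a minimal sketch of cost tracking per call, per model, and per agent: price each logged call, then group the totals. The `CallLog` shape, the per-million-token `Pricing` table, and the `callCost`/`costBy` helpers are assumptions for illustration, not RelayPlane's data model. Real vendor prices differ by model and change over time.

```typescript
// Hypothetical shape of one logged call (input/output, latency, errors).
interface CallLog {
  agent: string;
  model: string;
  inputTokens: number;
  outputTokens: number;
  latencyMs: number;
  error?: string;
}

// Hypothetical USD prices per million tokens, keyed by model name.
type Pricing = Record<string, { inputPerM: number; outputPerM: number }>;

// Cost of a single call; unknown models are reported as 0 rather than guessed.
function callCost(log: CallLog, pricing: Pricing): number {
  const price = pricing[log.model];
  if (!price) return 0;
  return (log.inputTokens * price.inputPerM + log.outputTokens * price.outputPerM) / 1e6;
}

// Sum costs grouped by model or by agent.
function costBy(logs: CallLog[], pricing: Pricing, key: "model" | "agent"): Map<string, number> {
  const totals = new Map<string, number>();
  for (const log of logs) {
    const k = log[key];
    totals.set(k, (totals.get(k) ?? 0) + callCost(log, pricing));
  }
  return totals;
}
```

An alert is then just a threshold check on these totals, for example flagging any agent whose summed cost exceeds a daily budget.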

✅ Agent-to-Agent Workflows (A2A)

- Send a message to an agent that responds by triggering others

- Useful for chaining Claude → GPT-4 → Gemini

- Plugin SDK for custom behaviors (coming soon)

✅ Bring Your Own Keys

- RelayPlane doesn't resell model access

- You use your own API keys for each model or vendor

- Avoids markup, maintains direct control
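With bring-your-own-keys, each vendor call is authenticated with your own credential. Here is a minimal sketch of resolving one key per vendor from an environment-like record and failing fast when one is missing. The `KEY_VARS` mapping and `resolveKeys` helper are hypothetical. The variable names follow common vendor SDK conventions and are not RelayPlane configuration.

```typescript
// Hypothetical mapping from vendor to the env var holding your own API key.
const KEY_VARS = {
  openai: "OPENAI_API_KEY",
  anthropic: "ANTHROPIC_API_KEY",
  google: "GEMINI_API_KEY",
} as const;

type Vendor = keyof typeof KEY_VARS;

// Look up a key for each requested vendor (pass process.env in Node).
// Throws listing every missing variable so misconfiguration surfaces at startup.
function resolveKeys(
  env: Record<string, string | undefined>,
  vendors: Vendor[],
): Partial<Record<Vendor, string>> {
  const keys: Partial<Record<Vendor, string>> = {};
  const missing: string[] = [];
  for (const v of vendors) {
    const value = env[KEY_VARS[v]];
    if (value) keys[v] = value;
    else missing.push(KEY_VARS[v]);
  }
  if (missing.length > 0) {
    throw new Error(`Missing API keys: ${missing.join(", ")}`);
  }
  return keys;
}
```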

Example: RelayPlane in Action

Here's how a multi-agent task works in RelayPlane:

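```typescript
const res = await relay({
  // Step 1: Claude summarizes the transcript
  to: "claude",
  payload: {
    messages: [{ role: "user", content: "Summarize this meeting transcript" }],
  },
  // Each `next` step builds its payload from the previous step's response
  next: [
    {
      // Step 2: GPT-4 turns the summary into action items
      to: "gpt-4",
      payload: (prev) => ({
        messages: [{ role: "user", content: prev.summary + " → Create action items." }],
      }),
    },
    {
      // Step 3: Gemini turns the action items into a slide deck
      to: "gemini",
      payload: (prev) => ({
        messages: [{ role: "user", content: prev.actionItems + " → Create a slide deck." }],
      }),
    },
  ],
});
```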
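A chain like the one in the `relay()` example can be executed by making the first call and then passing each response into the next step's `payload` function. The sketch below shows that pattern. `runChain`, `Send`, and the step types are hypothetical names loosely modeled on the example, not RelayPlane's API. The transport to each model is left to the caller.

```typescript
type Message = { role: "user" | "assistant" | "system"; content: string };
type Payload = { messages: Message[] };

// First step has a fixed payload; later steps derive theirs from the prior response.
type FirstStep = { to: string; payload: Payload };
type NextStep<R> = { to: string; payload: (prev: R) => Payload };

// Caller-supplied transport that sends a payload to a named model.
type Send<R> = (model: string, payload: Payload) => Promise<R>;

// Run the steps strictly in order and return every intermediate response.
async function runChain<R>(first: FirstStep, next: NextStep<R>[], send: Send<R>): Promise<R[]> {
  let prev = await send(first.to, first.payload);
  const results: R[] = [prev];
  for (const step of next) {
    prev = await send(step.to, step.payload(prev));
    results.push(prev);
  }
  return results;
}
```

Returning every intermediate response, not only the last one, makes it possible to log and cost each hop separately.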

Feature Comparison

| Feature | LiteLLM | RelayPlane |
|---|---|---|
| Unified LLM API | ✅ | ✅ |
| Agent workflows (MCP) | ❌ | ✅ |
| Built-in logging + metrics | ⚠️ | ✅ |
| Retry + failover | ❌ | ✅ |
| BYO API keys | ✅ | ✅ |
| Web UI & dashboards | ❌ | ✅ |
| Plugin SDK (custom logic) | ❌ | 🟡 |

Key: ⚠️ = available via optional plugins; 🟡 = coming soon.

When to Choose Each

Choose LiteLLM if you:

- Need quick multi-model access

- Are prototyping or testing prompts

- Want minimal setup overhead

- Have simple, single-call workflows

Choose RelayPlane if you:

- Need production-grade reliability

- Want built-in observability

- Have multi-agent workflows

- Need cost optimization & alerts

The Bottom Line

If you're just experimenting with prompts, LiteLLM is a solid tool. But if you're building production workflows, managing costs, or routing between agents and vendors, RelayPlane gives you the infrastructure foundation you need.

It's not just about calling models. It's about shipping AI features faster, with reliability, observability, and modularity built in.

Ready to try RelayPlane? Join our waitlist to get early access to the AI control plane that handles routing, observability, and agent orchestration out of the box.